Paul Yanik, Stan Healy


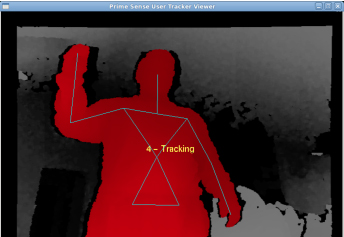

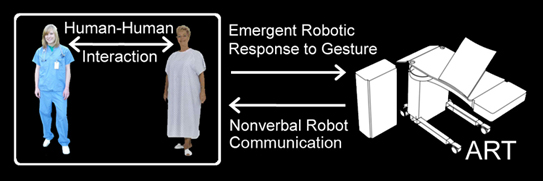

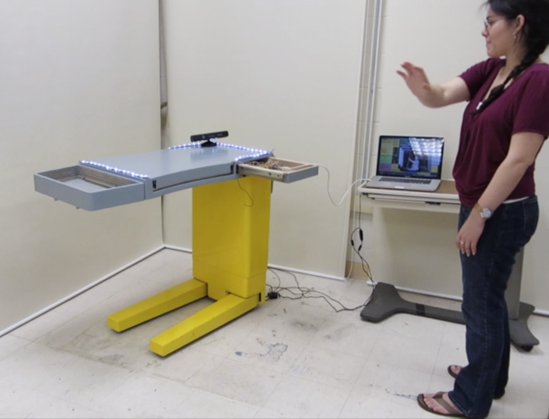

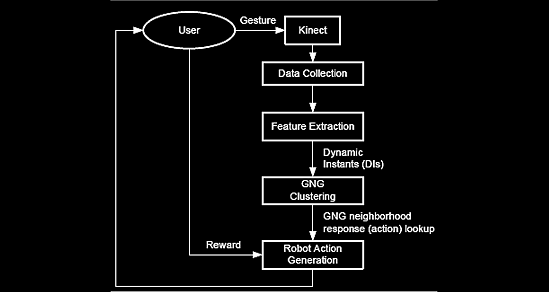

Nonverbal Human-Robot Communication

Overview: We have been developing an appropriate and effective nonverbal communication (NVC) platform for robots that communicate with people: a mode of communication that dignifies what it is to be human by neither competing with us nor imposing on our social, emotional, and cognitive constitution. We have developed an initial working prototype, integrated with our Assistive Robotic Table (ART), with support from NSF's Smart Health & Wellbeing Program. The NVC is conveyed by the familiar means of audio-visual communication (AVC): low-cost lighting (colors, patterns) and sounds. Its design is based on an understanding of the cognitive and perceptual processes of nonverbal communication in humans. The NVC, in turn, affords a communicative dialogue (i.e., acknowledging requests or providing requested feedback) that serves the purpose of accomplishing tasks. Our use of learning algorithms gives both user and robot the capacity to interrupt, query, and correct the dialogue, and lets the robot convey some semblance of emotional information (e.g., urgency, respect, frustration) at a level that does not disconcert the user and that the user would not misconstrue as human.

Threatt, A. L., Green, K. E., Brooks, J. O., Merino, J., Walker, I. D., Yanik, P. (2013) "Design and Evaluation of a Nonverbal Communication Platform between Assistive Robots and their Users." In: Streitz, N., and Stephanidis, C. (eds.) Distributed, Ambient, and Pervasive Interactions. DAPI 2013. Lecture Notes in Computer Science, vol. 8028. Springer, Berlin, Heidelberg, pp. 505–513.

Yanik, P., Manganelli, J., Merino, Threatt, T., Brooks, J. O., Green, K. E. and Walker, I. D. "Use of Kinect Depth Data and Growing Neural Gas for Gesture Based Robot Control." Proceedings of PervaSense2012, the 4th International Workshop for Situation Recognition and Medical Data Analysis in Pervasive Health Environments. May 21, San Diego, California, pp. 283–290.

Yanik, P. M., Threatt, A. L., Merino, J., Manganelli, J., Brooks, J. O., Green, K. E. and Walker, I. D. "A Method for Lifelong Gesture Learning Based on Growing Neural Gas." Proceedings of HCI International 2014 (Crete, Greece, July 21-27, 2014). In M. Kurosu, Ed., Human-Computer Interaction, Part II, HCII 2014, LNCS 8511, Springer International Publishing Switzerland 2014, pp. 191–202.
